AI Model Service Deployment (FastDeploy and VisualDL Visual Deployment) - Smoking Detection (Object Detection)

Founder

2024-12-28 01:33:46

FastDeploy and VisualDL (Visual Deployment)

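- 1. Docker Installation

- 1.1 Operations Inside the Container

- 2. VisualDL Visual Deployment

- 2.1 PPYOLOE Model Deployment Example: Smoking Detection

- 3. Remote Invocation

- 3.1 Calling from Java (HTTP)

- 3.2 Calling from Python (HTTP)

- 3.3 Calling from Python (GRPC)

- 3.4 Successful Call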

1. Docker Installation

CPU version

docker-compose.yml

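```yaml
# '4.0' is not a valid Compose file version: docker-compose v1 rejects it, Compose v2 ignores this field
version: '3.8'
services:
  paddle_serving_cpu:
    image: registry.baidubce.com/paddlepaddle/fastdeploy:1.0.7-cpu-only-21.10
    container_name: fastdeploy
    ports:
      # host port 9393 -> VisualDL on port 8080 inside the container
      # if clients outside the container call the HTTP/GRPC service, publish the ports configured for it in VisualDL here as well
      - 9393:8080
    command: bash
    tty: true
    working_dir: /root
    # mounted directory
    volumes:
      - /var/data/FastDeploy/root:/root
```

1.1 Operations Inside the Container

Enter the container first with `docker exec -it fastdeploy bash` (the name comes from `container_name` above).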

- Install VisualDL 2.5.0. The interface shown in this article is from this version.

Note: both the interface and the features differ between versions.

```bash
python -m pip install visualdl==2.5.0
```
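Because the interface and features differ between VisualDL versions, it can help to confirm which version actually ended up in the container. The following is a minimal sketch using the standard library's `importlib.metadata` (Python 3.8+). The helper names are illustrative and not part of VisualDL.

```python
from importlib.metadata import PackageNotFoundError, version

EXPECTED_VISUALDL = "2.5.0"  # version this article was written against


def visualdl_version_matches(installed, expected=EXPECTED_VISUALDL):
    """Return True if the installed version string equals the expected one."""
    return installed == expected


def installed_visualdl_version():
    """Return the installed VisualDL version, or None if it is not installed."""
    try:
        return version("visualdl")
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_visualdl_version()
    if found is None:
        print("VisualDL is not installed")
    elif visualdl_version_matches(found):
        print(f"VisualDL {found} matches the version used in this article")
    else:
        print(f"VisualDL {found} differs from {EXPECTED_VISUALDL}; the UI may not match the screenshots")
```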

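- Clone the example code with git

```bash
git clone https://github.com/PaddlePaddle/FastDeploy.git
```

- Start VisualDL from the `examples` directory of the FastDeploy project

```bash
cd FastDeploy/examples
visualdl --host 0.0.0.0 --port 8080
```

With the port mapping in `docker-compose.yml`, the VisualDL UI is then reachable on the host at port 9393.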
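A plain TCP connection test is enough to confirm that the VisualDL UI is reachable through the `9393:8080` port mapping. Below is a minimal sketch using the standard `socket` module. The host `127.0.0.1` is an assumption; use the Docker host's IP when checking from another machine. `port_is_open` is a hypothetical helper.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


if __name__ == "__main__":
    # Assumed host; 9393 is the host port mapped to VisualDL's 8080 in docker-compose.yml
    print("VisualDL reachable:", port_is_open("127.0.0.1", 9393))
```

A successful connection only shows that something is listening on the port. Opening the page in a browser is still the real check.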

2. VisualDL Visual Deployment

2.1 PPYOLOE Model Deployment Example: Smoking Detection

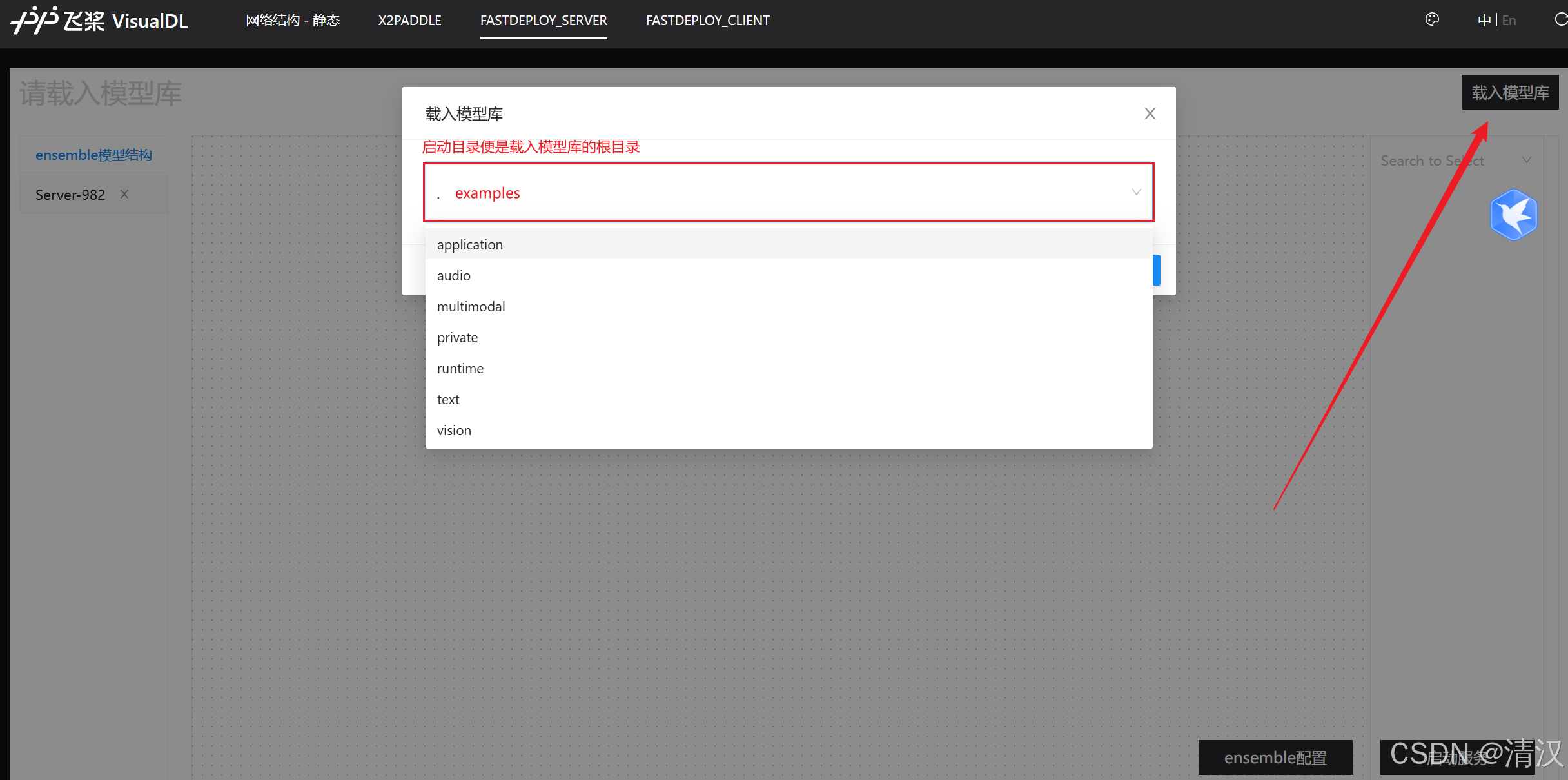

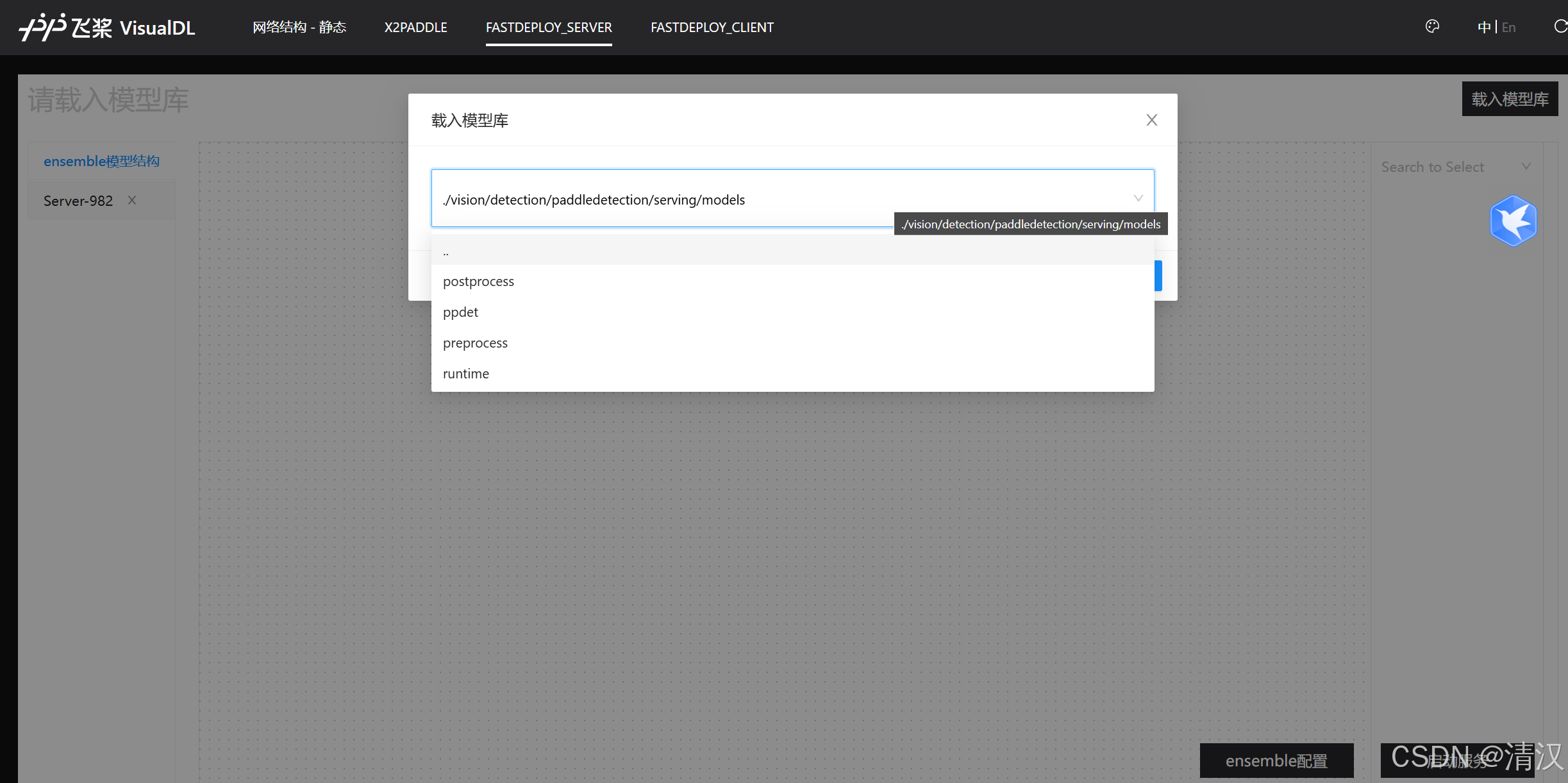

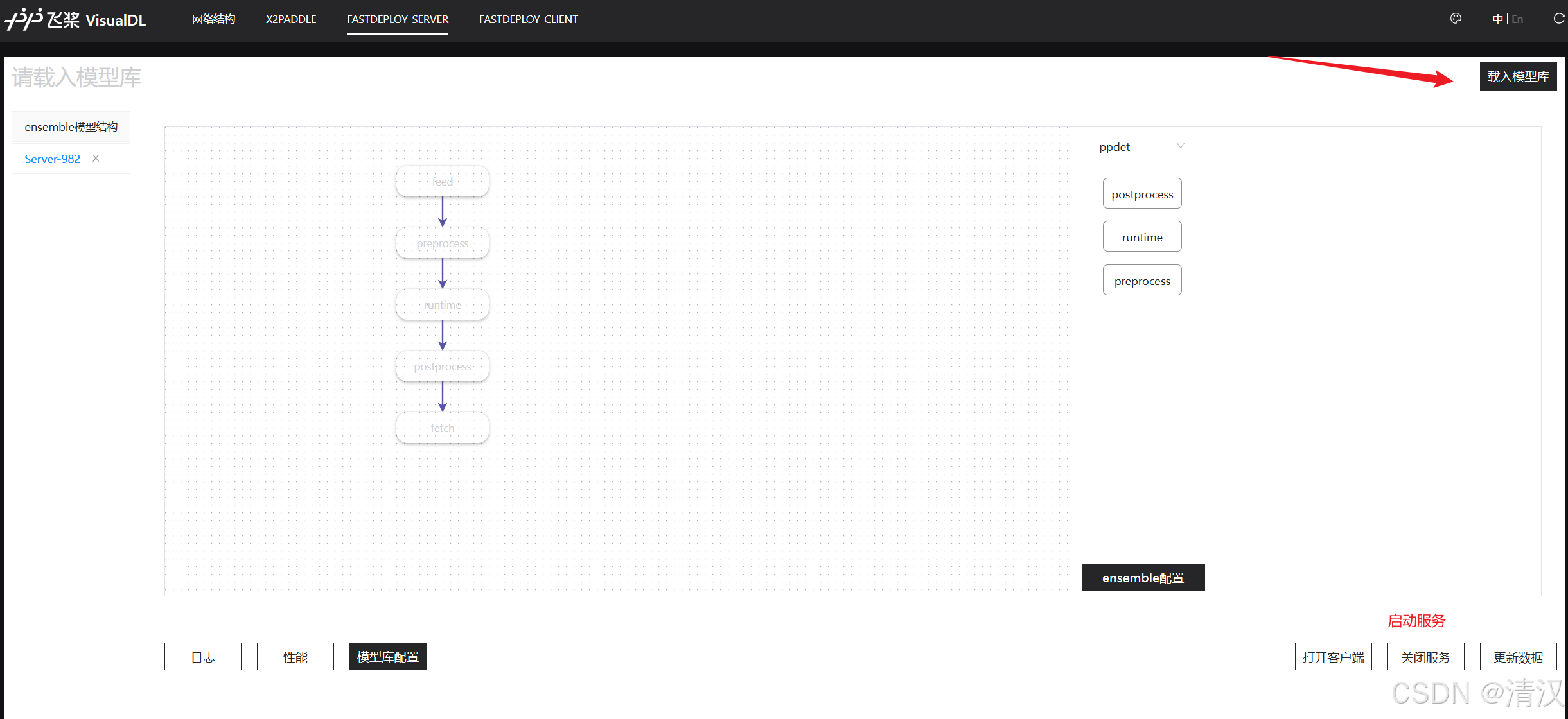

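- Load the model repository

Note: this directory only provides the structure for calling the model, such as the preprocessing and postprocessing steps. The model itself is not included.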

FastDeploy/examples/vision/detection/paddledetection/serving/models

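- Put the trained model files into the folders according to the following layout.

If you have not trained a model yourself, you can also download a pre-trained smoking detection model.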

| Directory | File | Notes |
|---|---|---|
| models/preprocess/1 | infer_cfg.yml | Configuration file |
| models/runtime/1 | model.pdmodel | Model structure |
| models/runtime/1 | model.pdiparams | Model parameters |
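The layout in the table can also be filled by a script. This is a minimal sketch. It assumes the trained model was exported into a single directory containing `infer_cfg.yml`, `model.pdmodel` and `model.pdiparams`, which is the usual PaddleDetection export output. `place_model_files` and the paths in the usage note are hypothetical.

```python
import shutil
from pathlib import Path

# (file name, destination directory relative to the model repository), following the table above
LAYOUT = [
    ("infer_cfg.yml", "preprocess/1"),
    ("model.pdmodel", "runtime/1"),
    ("model.pdiparams", "runtime/1"),
]


def place_model_files(export_dir, models_dir):
    """Copy the exported model files into the serving model repository; return the copied paths."""
    export_dir, models_dir = Path(export_dir), Path(models_dir)
    copied = []
    for name, sub in LAYOUT:
        src = export_dir / name
        if not src.is_file():
            raise FileNotFoundError(f"missing exported file: {src}")
        dst_dir = models_dir / sub
        dst_dir.mkdir(parents=True, exist_ok=True)  # create e.g. models/runtime/1 if needed
        dst = dst_dir / name
        shutil.copy2(src, dst)
        copied.append(dst)
    return copied
```

For example, `place_model_files("output_inference/ppyoloe_smoking", "FastDeploy/examples/vision/detection/paddledetection/serving/models")`. The first path is a placeholder for your own export directory.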

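From the `serving` directory above, rename the PPYOLOE config files in the `ppdet` and `runtime` directories to the standard name `config.pbtxt`:

```bash
cp models/ppdet/ppyoloe_config.pbtxt models/ppdet/config.pbtxt
cp models/runtime/ppyoloe_runtime_config.pbtxt models/runtime/config.pbtxt

# Only for mask_rcnn: because it has one extra output, mask_config.pbtxt in the
# postprocess directory (models/postprocess) must be renamed to config.pbtxt.
# Not needed for PPYOLOE:
# cp models/postprocess/mask_config.pbtxt models/postprocess/config.pbtxt
```

Configure the model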

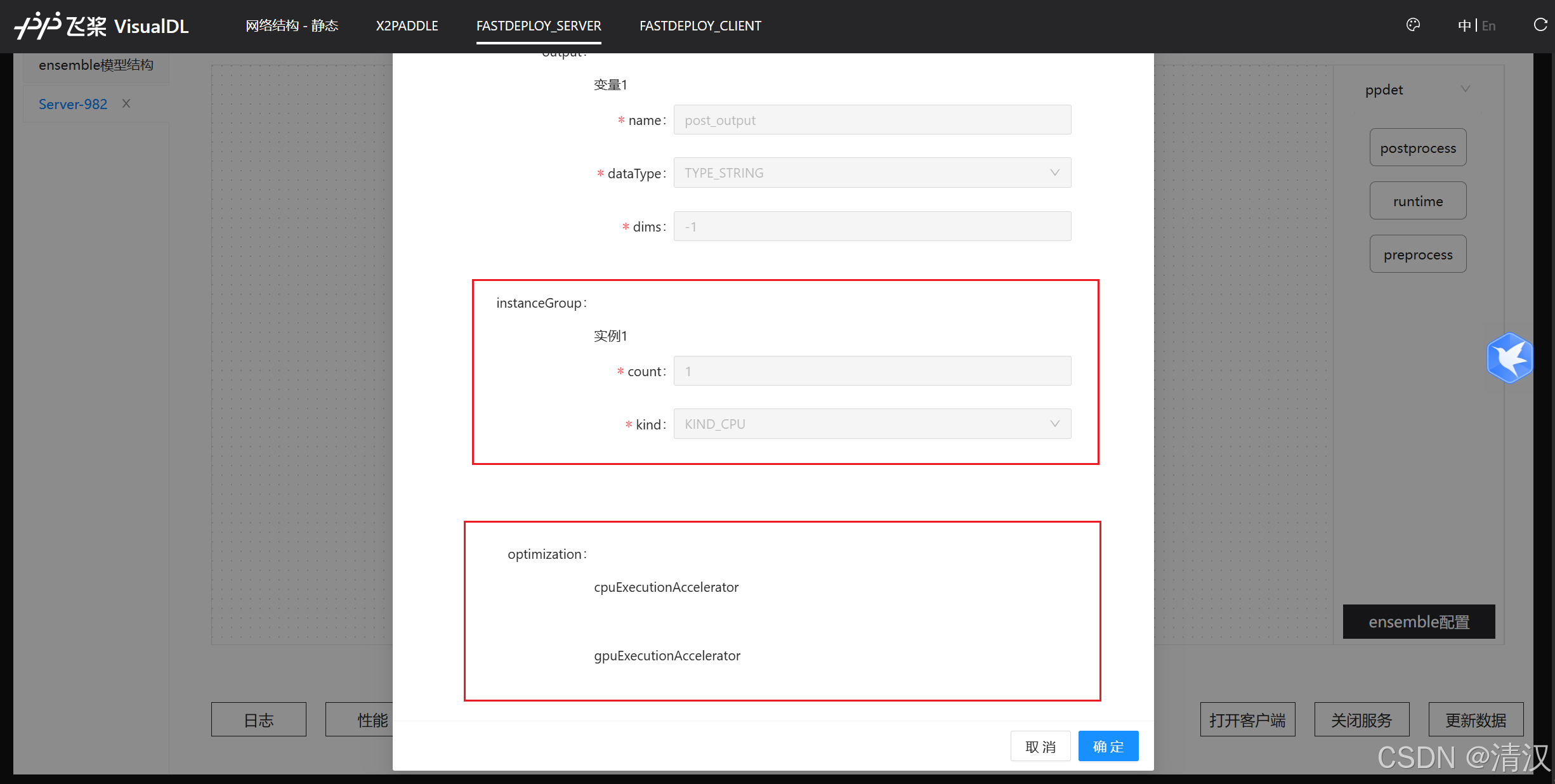

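Note: configure this according to your own machine. This article uses the CPU build of FastDeploy on a machine without a GPU, so none of the GPU-related options are selected.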

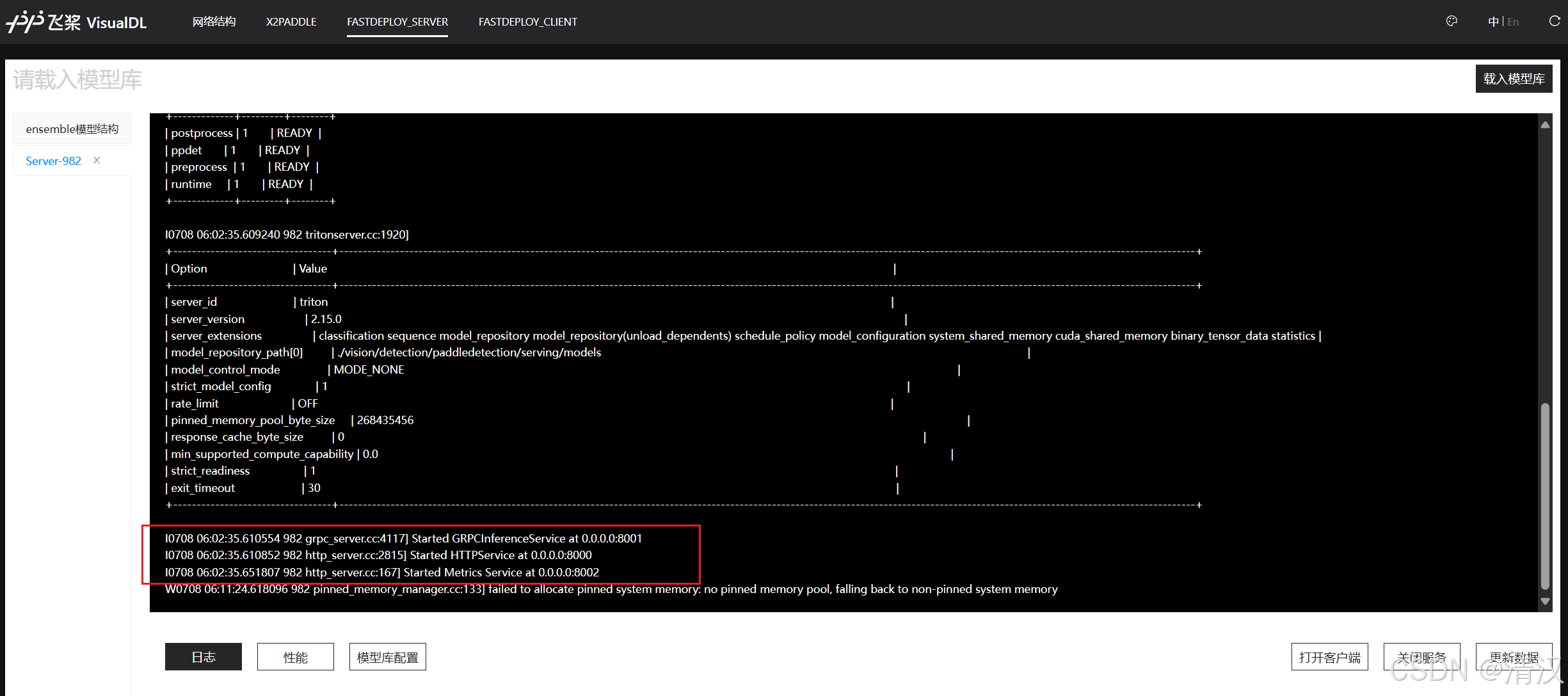
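Before starting the service, you may want to check that every sub-model directory now has a `config.pbtxt`. The sketch below assumes the four sub-models of the PaddleDetection serving example (`preprocess`, `runtime`, `postprocess`, `ppdet`) each need one after the renames. `missing_configs` is a hypothetical helper.

```python
from pathlib import Path

# Sub-models of the PaddleDetection serving example; each is assumed to need a config.pbtxt
SUB_MODELS = ("preprocess", "runtime", "postprocess", "ppdet")


def missing_configs(models_dir):
    """Return the sub-model names whose directory still lacks a config.pbtxt."""
    root = Path(models_dir)
    return [name for name in SUB_MODELS if not (root / name / "config.pbtxt").is_file()]


if __name__ == "__main__":
    repo = "FastDeploy/examples/vision/detection/paddledetection/serving/models"
    missing = missing_configs(repo)
    print("missing config.pbtxt in:", missing or "none")
```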

Start the service

The service provides both HTTP and GRPC endpoints.
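Once the service is running, you can check its readiness over HTTP before sending images. FastDeploy serving is built on Triton Inference Server, which exposes `/v2/health/ready` and `/v2/models/{name}/versions/{version}/ready`. The sketch assumes this deployment keeps those endpoints. It uses the model name `ppdet` and version `1` from the clients in section 3. The host is a placeholder, and port `8000` is only Triton's default HTTP port, so it may differ from what you configured in VisualDL.

```python
import urllib.request


def readiness_urls(host, port, model="ppdet", version="1"):
    """Build the server and model readiness URLs of the v2 HTTP protocol."""
    base = f"http://{host}:{port}"
    return [f"{base}/v2/health/ready", f"{base}/v2/models/{model}/versions/{version}/ready"]


def is_ready(url, timeout=3.0):
    """Return True if a GET on url answers HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError/HTTPError and socket errors are all OSError subclasses
        return False


if __name__ == "__main__":
    for u in readiness_urls("127.0.0.1", 8000):  # placeholder host and port
        print(u, "->", is_ready(u))
```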

3. Remote Invocation

3.1 Calling from Java (HTTP)

```java
package cn.nhd.fsl.controller.test;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONArray;
import com.alibaba.fastjson.JSONObject;
import com.squareup.okhttp.*;

import javax.imageio.ImageIO;
import java.awt.image.BufferedImage;
import java.io.File;
import java.io.IOException;

public class FastDeploy {

    public static void main(String[] args) throws IOException {
        int[][][] ints = readImagePath("D:\\code\\fastdeploy\\pythonProject1\\image\\OIP1.jpg");
        // The shape must match the image's actual pixel size: height = ints.length, width = ints[0].length
        JSONObject json = generateJson(ints.length, ints[0].length, ints);
        String s = fastDeployConnect(json.toJSONString());
        System.out.println(s);

        // Parse the JSON response with Fastjson
        JSONObject jsonObject = JSON.parseObject(s);
        // outputs[0].data[0] is itself a JSON string containing the detection result
        JSONArray outputsArray = jsonObject.getJSONArray("outputs");
        JSONObject jsonObject1 = outputsArray.getJSONObject(0);
        JSONArray data = jsonObject1.getJSONArray("data");
        String dataStr = data.getString(0);
        JSONObject dataJson = JSON.parseObject(dataStr);
        JSONArray scores = dataJson.getJSONArray("scores");
        // Take the highest score; an empty list means nothing was detected
        double score = 0.0;
        for (int i = 0; i < scores.size(); i++) {
            score = Math.max(score, scores.getDoubleValue(i));
        }
        // Threshold in the range 0 ~ 1: scores above it are treated as smoking
        if (score > 0.5) {
            System.out.println("Abnormal behavior");
        } else {
            System.out.println("Normal behavior");
        }
    }

    /**
     * Returns the RGB values of the image as a [height][width][3] array.
     * @param path image path
     * @return RGB array
     * @throws IOException if the image cannot be read
     */
    public static int[][][] readImagePath(String path) throws IOException {
        BufferedImage image = ImageIO.read(new File(path));
        int height = image.getHeight();
        int width = image.getWidth();
        int[][][] rgbArray = new int[height][width][3];
        for (int row = 0; row < height; row++) {
            for (int col = 0; col < width; col++) {
                int pixel = image.getRGB(col, row);
                rgbArray[row][col][0] = (pixel >> 16) & 0xff; // R
                rgbArray[row][col][1] = (pixel >> 8) & 0xff;  // G
                rgbArray[row][col][2] = pixel & 0xff;         // B
            }
        }
        return rgbArray;
    }

    /**
     * Builds the request JSON directly instead of defining request classes.
     * @param imgHeight image height in pixels
     * @param imgWidth image width in pixels
     * @param rgbArray RGB array of shape [height][width][3]
     * @return request body for the /v2/models/ppdet/versions/1/infer endpoint
     */
    public static JSONObject generateJson(int imgHeight, int imgWidth, int[][][] rgbArray) {
        JSONObject jsonObject = new JSONObject();
        JSONArray inputArray = new JSONArray();
        JSONObject inputObject = new JSONObject();
        // shape = [batch, height, width, channels]
        JSONArray shapeArray = new JSONArray();
        shapeArray.add(1);
        shapeArray.add(imgHeight);
        shapeArray.add(imgWidth);
        shapeArray.add(3);
        // Wrap the image in an outer array to form a batch of one
        JSONArray dataArray = new JSONArray();
        JSONArray datajsonArray = JSONArray.parseArray(JSONArray.toJSONString(rgbArray));
        dataArray.add(datajsonArray);
        inputObject.put("name", "INPUT");
        inputObject.put("shape", shapeArray);
        inputObject.put("datatype", "UINT8");
        inputObject.put("data", dataArray);
        inputArray.add(inputObject);
        jsonObject.put("inputs", inputArray);
        return jsonObject;
    }

    /**
     * Sends the request to the FastDeploy serving HTTP inference endpoint.
     * @param jsonParamStr request body as a JSON string
     * @return response body
     */
    public static String fastDeployConnect(String jsonParamStr) throws IOException {
        MediaType mediaType = MediaType.parse("application/json");
        RequestBody body = RequestBody.create(mediaType, jsonParamStr);
        // Fill in the actual server IP and HTTP port
        Request request = new Request.Builder()
                .url("http://{IP}:{PORT}/v2/models/ppdet/versions/1/infer")
                .post(body)
                .build();
        OkHttpClient client = new OkHttpClient();
        Response response = client.newCall(request).execute();
        String str = response.body().string();
        return str;
    }
}
```

3.2 Calling from Python (HTTP)

```python
from PIL import Image
import numpy as np
import json
import requests

img_path = r'D:\code\fastdeploy\pythonProject1\image\OIP1.jpg'

# Open the image file
img = Image.open(img_path)
# Make sure the image is in RGB format; convert it if it is not
if img.mode != 'RGB':
    img_rgb = img.convert('RGB')
else:
    img_rgb = img

# Resize the image to 640x640.
# Resizing is optional, but "shape" must always match the array actually sent.
img_resized = img_rgb.resize((640, 640))
# Convert the image into a (height, width, 3) uint8 RGB array
img_array = np.array(img_resized)
# The input is declared as UINT8, so no float32 conversion is needed

# Request URL: fill in the server IP and HTTP port
url = 'http://{IP}:{PORT}/v2/models/ppdet/versions/1/infer'

# Request body: one UINT8 input named INPUT with shape [1, height, width, 3]
data = {
    "inputs": [
        {
            "name": "INPUT",
            "datatype": "UINT8",
            "shape": [1, *img_array.shape],
            "data": img_array.tolist()
        }
    ]
}
# Serialize the dict to a JSON string
json_data = json.dumps(data)
# Send the POST request
response = requests.post(url, headers={'Content-Type': 'application/json'}, data=json_data)
# Print the response body
print(response.text)
```

3.3 Calling from Python (GRPC)

```python
import json
import logging
from typing import Optional

import cv2
import numpy as np
from tritonclient.grpc import InferenceServerClient, InferInput, InferRequestedOutput

LOGGER = logging.getLogger("run_inference_on_triton")


class SyncGRPCTritonRunner:
    DEFAULT_MAX_RESP_WAIT_S = 120

    def __init__(
            self,
            server_url: str,
            model_name: str,
            model_version: str,
            *,
            verbose=False,
            resp_wait_s: Optional[float] = None,
    ):
        self._server_url = server_url
        self._model_name = model_name
        self._model_version = model_version
        self._verbose = verbose
        self._response_wait_t = self.DEFAULT_MAX_RESP_WAIT_S if resp_wait_s is None else resp_wait_s

        self._client = InferenceServerClient(self._server_url, verbose=self._verbose)
        error = self._verify_triton_state(self._client)
        if error:
            raise RuntimeError(f"Could not communicate to Triton Server: {error}")

        LOGGER.debug(
            f"Triton server {self._server_url} and model {self._model_name}:{self._model_version} "
            f"are up and ready!")

        model_config = self._client.get_model_config(self._model_name, self._model_version)
        model_metadata = self._client.get_model_metadata(self._model_name, self._model_version)
        LOGGER.info(f"Model config {model_config}")
        LOGGER.info(f"Model metadata {model_metadata}")

        for tm in model_metadata.inputs:
            print("tm:", tm)
        self._inputs = {tm.name: tm for tm in model_metadata.inputs}
        self._input_names = list(self._inputs)
        self._outputs = {tm.name: tm for tm in model_metadata.outputs}
        self._output_names = list(self._outputs)
        self._outputs_req = [InferRequestedOutput(name) for name in self._outputs]

    def Run(self, inputs):
        """
        Args:
            inputs: list, each value corresponds to an input name of self._input_names
        Returns:
            results: dict, {name: numpy.array}
        """
        infer_inputs = []
        for idx, data in enumerate(inputs):
            # The ppdet model takes a single UINT8 input of shape [batch, height, width, 3],
            # the same request format as in the HTTP examples
            infer_input = InferInput(self._input_names[idx], data.shape, "UINT8")
            infer_input.set_data_from_numpy(data)
            infer_inputs.append(infer_input)

        results = self._client.infer(
            model_name=self._model_name,
            model_version=self._model_version,
            inputs=infer_inputs,
            outputs=self._outputs_req,
            client_timeout=self._response_wait_t,
        )
        results = {name: results.as_numpy(name) for name in self._output_names}
        return results

    def _verify_triton_state(self, triton_client):
        if not triton_client.is_server_live():
            return f"Triton server {self._server_url} is not live"
        elif not triton_client.is_server_ready():
            return f"Triton server {self._server_url} is not ready"
        elif not triton_client.is_model_ready(self._model_name, self._model_version):
            return f"Model {self._model_name}:{self._model_version} is not ready"
        return None


if __name__ == "__main__":
    model_name = "ppdet"
    model_version = "1"
    url = "{IP}:{PORT}"  # GRPC address of the service
    runner = SyncGRPCTritonRunner(url, model_name, model_version)
    im = cv2.imread(r"D:\code\fastdeploy\pythonProject1\image\OIP1.jpg")  # HWC, uint8
    im = np.array([im, ])  # add the batch dimension: [1, height, width, 3]
    result = runner.Run([im, ])
    for name, values in result.items():
        print("output_name:", name)
        # values is a batch: one JSON string per image
        for value in values:
            value = json.loads(value)
            print(value['boxes'])
```

3.4 Successful Call

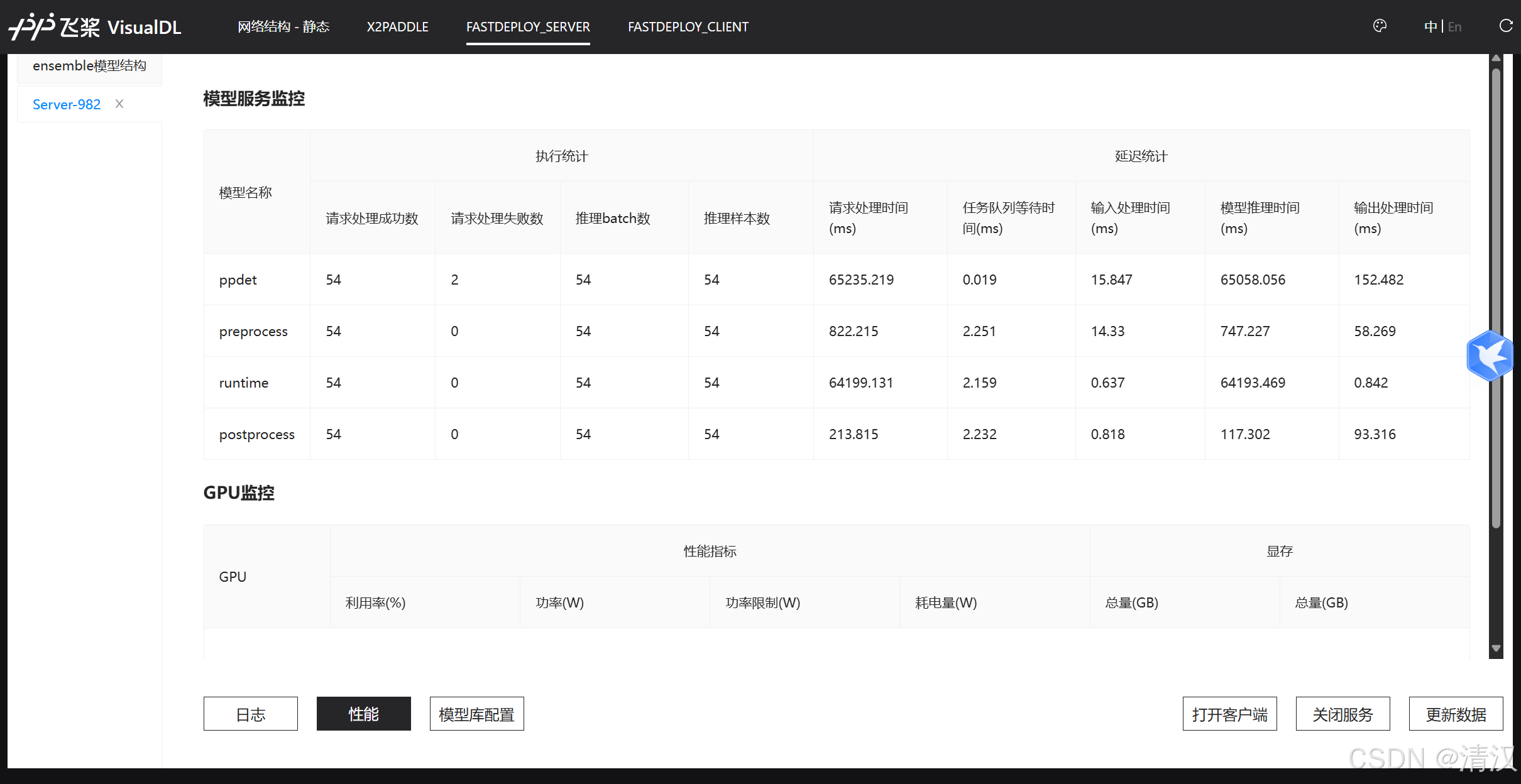

Backend monitoring
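The Java client in section 3.1 turns the response into a normal/abnormal decision, while the Python clients only print the raw result. The same decision can be sketched in Python as follows. Like the Java client, it assumes `outputs[0].data[0]` is a JSON string with `scores` (and `boxes`) fields. The 0.5 threshold mirrors the Java code, and the helper names are hypothetical.

```python
import json

SCORE_THRESHOLD = 0.5  # same 0 ~ 1 threshold as the Java client


def parse_detections(response_text):
    """Return (boxes, scores) from the ppdet infer response.

    outputs[0].data[0] is itself a JSON string, as in the Java client.
    """
    body = json.loads(response_text)
    result = json.loads(body["outputs"][0]["data"][0])
    return result.get("boxes", []), result.get("scores", [])


def is_smoking(scores, threshold=SCORE_THRESHOLD):
    """Flag the image if any detection score exceeds the threshold."""
    return any(s > threshold for s in scores)
```

With the HTTP client from section 3.2, this would be `boxes, scores = parse_detections(response.text)` followed by `is_smoking(scores)`.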
